Theory of Meaningful Information

Boris Sunik

boris.sunik@generalinformationtheory.com

Preface

This theory focuses on meaningful information and intelligence and follows a rather unconventional approach to problem solving. A few words about this approach are therefore in order before the book begins. In its most general form, it can be formulated as follows:

· The only kind of meaningful information recognized by human beings is spoken or written text composed in one of the human languages. Natural languages (English, German, Chinese, etc.) possess unrestricted expressive power and indefinitely extensible vocabularies, but they produce ambiguous texts that a computer cannot adequately understand.

· Unambiguous human languages can be found among artificial languages. All of these languages have limited expressive abilities, and only one kind of them, namely high-level programming languages, is actually in use. The expressive power of such a language is restricted to the representation of binary sequences (bits, bytes and sequences of bytes) and operations on them. Thus, the notion “car” can routinely be used in English to designate any real or imaginary car, but the definition of such a notion in a C++ program actually designates a sequence of bytes residing in the storage of the computer running this program.

· C++ is the most prominent programming tool of our time. It is the only compiled language in wide use, and most interpreted languages, such as C#, Java and JavaScript, also follow its syntax and conceptual system. Most complex applications, including AI algorithms, are programmed in this language. However, like other programming languages, C++ cannot be used to specify the contextual information of a programmed algorithm, such as the rules of program use or the characteristics of associated external objects existing outside computer storage.

· TMI (the Theory of Meaningful Information) defines the universal representation language T, which can be viewed as an extension of C++ with non-executable representations. In contrast to C++, T has an unrestricted representation world and allows the explicit specification of arbitrary real or imaginary entities in terms of traditional C++ concepts such as class, object, function and parameter. The language can represent both executable code and non-executable specifications of external entities.

Consider, for example, a modern robot with many processors controlling its various parts. Currently, the code base characterizing such a robot consists of a collection of programs for these processors; with T, it could be a single solution specifying both the executable software and the non-executable specifications. The latter can describe the rules by which the robot interacts with different external objects, the structure and functionality of these objects, procedures for collecting information, and so on.
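The second point above says that a C++ definition of the notion “car” designates nothing but bytes in storage. The following minimal sketch illustrates that claim; the struct `Car` and the helpers `to_bytes` and `from_bytes` are hypothetical names introduced only for this illustration. The object survives a round trip through a plain byte array without loss, because to the program it is nothing more than those bytes.

```cpp
#include <array>
#include <cstring>
#include <type_traits>

// Hypothetical C++ rendering of the notion "car". The field names suggest a
// vehicle, but the compiler only knows a fixed-size block of bytes.
struct Car {
    int wheels;      // number of wheels
    double mass_kg;  // mass in kilograms
};

static_assert(std::is_trivially_copyable<Car>::value,
              "Car must be trivially copyable to be treated as raw bytes");

using CarBytes = std::array<unsigned char, sizeof(Car)>;

// Copies the object's storage into a byte array: this is everything
// the program holds about "the car".
CarBytes to_bytes(const Car& car) {
    CarBytes bytes{};
    std::memcpy(bytes.data(), &car, sizeof(Car));
    return bytes;
}

// Rebuilds a Car from raw bytes: the "same car" is simply the same bytes.
Car from_bytes(const CarBytes& bytes) {
    Car car{};
    std::memcpy(&car, bytes.data(), sizeof(Car));
    return car;
}
```

Whether `Car{4, 1350.0}` is meant to stand for a real vehicle or an imaginary one, nothing in the program can express the difference; that link exists only in the programmer’s head.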

The TMI view of intelligence is based on a conceptualization of the information-related features of biological organisms. The ability to use information is already present in primitive multicellular organisms, and its steady evolutionary development led to its most complex form: intelligence. While amphibians and reptiles rarely possess any intelligence, this feature manifests itself in mammals and reaches its peak in Homo sapiens. TMI defines a model called Homo Informaticus, which can be used for the formal representation of the complete intellectual and physical activity of a human being.

TMI makes it possible to fix an extremely dangerous drawback of modern neural networks that results from their ability to produce new concepts. The practical application of neural networks became possible when researchers introduced networks with many layers of neurons, which made pattern recognition truly effective. A specific feature of this technique is that new knowledge can be obtained from information the network has already processed: it only has to analyze the obtained values once more to find new patterns, after which the process can be repeated. Given the complexity and continuing sophistication of AI systems, this feature inevitably leads to a situation in which a neural network can break out of control, for example, because it analyzes its own behavior and changes it for this or some other reason.

TMI offers the ultimate fix for this problem by allowing the complete application environment of a neural network to be specified. The code containing the formal definitions of all concepts and instances associated with the network’s task can be used by a supervising controller, which blocks any attempt by the network to generate a concept not included in the fixed list of allowed entities.
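The text does not describe how such a supervising controller would be built. As a rough illustration only, the sketch below assumes that every concept the network proposes can be identified by a name and checked against the fixed list of allowed entities. `ConceptSupervisor` and `approve` are hypothetical names, and mapping a network’s internal representations to named concepts is outside the scope of this sketch.

```cpp
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical supervising controller: it holds the fixed list of concepts
// allowed for a network's task and vetoes everything else.
class ConceptSupervisor {
public:
    explicit ConceptSupervisor(std::set<std::string> allowed)
        : allowed_(std::move(allowed)) {}

    // Returns true if the proposed concept is on the fixed list; otherwise
    // records the attempt as rejected and returns false.
    bool approve(const std::string& name) {
        if (allowed_.count(name) != 0) return true;
        rejected_.push_back(name);
        return false;
    }

    // All blocked attempts, in the order they were made.
    const std::vector<std::string>& rejected() const { return rejected_; }

private:
    const std::set<std::string> allowed_;  // fixed at construction, never extended
    std::vector<std::string> rejected_;    // log of vetoed concepts
};
```

The list is `const` by design: the controller offers no way to extend it at run time, so a concept the network invents can only be rejected, never admitted.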

1. Introduction

We live in the age of applied sciences. Consider the role of applied biology, responsible for the remarkable progress in medicine, or of applied physics and applied chemistry, which form the basis of modern industry and infrastructure in general. It is nearly impossible to imagine our society without the applications of social sciences such as economics, sociology, social psychology, political science and criminology. Frequently ignored, however, is the fact that the boom of applied sciences was only possible because of the fundamental sciences, without which the development of the last 150 years would never have occurred.

The only major type of activity still lacking an adequate theoretical conceptualization is the one concerned with meaningful information. While the technical aspects of information transmission are effectively covered by Claude Shannon’s information theory, meaningful information has never had any relevant theoretical support. Despite more than a hundred years of research devoted to the formalization of meaning, this research has never produced an applicable theory with any impact on practical information-related activities.

This situation results from a deep chasm that has opened between theoretical research and practical application over the last few decades. The two fields have mostly developed independently of one another. To take the necessary step forward in understanding and developing a theory of meaningful information, they must be reunited.

In the theoretical sphere, the first works devoted to this subject appeared as early as the 19th century with the definition of semantics. In the following century, this research grew very extensive and expanded into a heterogeneous field incorporating ideas from computing, cognitive sciences, linguistics, medicine and other disciplines. Regrettably, a vast amount of energy was spent building a bridge to nowhere. The situation remains the same in the present century, in which software developers evidently seem to have lost interest in this research because of its complete uselessness.

The failure of theory, however, never hindered applied development, which proved to be highly effective. The only working methods and tools ever employed for the representation and processing of meaningful information are the mainstream programming languages used to create both conventional and intelligent applications. Currently, their number barely exceeds a dozen, with one of them, C++, playing an especially outstanding role.

This language, also referred to as C/C++, is the only compiled language in wide use today. The other mainstream programming languages are interpreted, and most of them also implement variants of the C++ conceptual system (C# and Java are the most prominent examples). All mainstream languages were developed in a purely pragmatic way without applying any scientific methods[1], and programmers acquire them intuitively, much as one learns to drive a car. Learning by doing is highly effective in practical work; however, the concepts formed in specialists’ heads in this inductive way are based on tacit knowledge and do not permit deep scientific conceptualization.

The discrepancy between academic research and the realities of everyday software development results from fundamentally incompatible approaches. While academic research never succeeded in creating effective programming tools based on mathematically correct solutions, practical programming turned out to be extremely successful by employing programming languages built on a non-mathematical basis.

It would be incorrect to say that practical programmers were never interested in scientific research; in reality, this lack of interest is a rather recent phenomenon that developed over the last thirty years. A completely different spirit prevailed at the start of the computer era, when programmers struggled to find a workable alternative to exhausting and slow machine-code programming. Science was seen as the only hope, and after the first high-level programming language, FORTRAN, appeared, practitioners became theoreticians and produced a rigorous, mathematized theory of programming languages. As a result, all non-mathematical approaches came to be viewed as non-scientific.

Such an interpretation of programming was not accidental: the first computers were developed for mathematical calculations, and as a result most programmers of that time were mathematicians and logicians who viewed programming as a branch of mathematics. The obvious divergence between scientific concepts and the realities of everyday software development was not perceived as a major problem, because mainstream programming languages were considered a temporary solution needed only until theoretically correct programming tools reached maturity. Neglect of mainstream programming went so far that theoreticians failed to produce a generally accepted definition of a programming language, because such a definition could not be formulated within the conceptual framework of recognized theories.

This largely ineffective approach was tolerated in the era of expensive mainframes but completely lost its appeal after the appearance of C++ and cheap personal computers. In retrospect, it is clear that the zero output of many decades of intensive, mathematics-driven research is the ultimate proof that its key postulate, presumably first formulated by Galileo Galilei, is wrong: “The Book of Nature is written in the language of mathematics”. This idea, widespread across many disciplines, effectively killed theoretical programming by automatically rejecting all non-mathematical concepts as unscientific. Since no self-respecting scientist would ever engage in non-scientific studies, official science has been unable to leave its convenient blind alley and propose adequate concepts; it has turned a blind eye to the reality of programming and continues to do so today.

The success of pragmatically developed programming concepts, however, does not mean that proper fundamental software research is no longer needed. On the contrary, it has probably never been as topical as it is now, because of the immense damage potential of the failed and seemingly long-forgotten theories of traditional computer science. The reason is AI theory, which was originally developed as a branch of traditional computer science, reuses many of its results and appears to be obsessed with mathematically correct methods. This theory has now finally come into its own: thousands of articles describing miscellaneous formal concepts are published in AI journals and proceedings every year. This, however, does not bring us one iota closer to understanding what AI is and how it functions.

The results of this dead-end campaign can already be seen in the level of discussion about the dangers of AI development. Not only average AI developers but also such prominent figures as the multibillionaire Elon Musk, the late physicist Stephen Hawking and the computer scientist Ray Kurzweil have weighed in on the subject. However, they too are helpless, and their opinions lack any theoretically sound arguments.

Currently, AI specialists rely on commonsense considerations and imprecise historical examples[2]. If this situation is allowed to continue, there is a real chance that in the not-so-distant future a team of AI developers might underestimate the self-learning capabilities of their own creation and bring the era of human civilization on this planet to an end.

This book attempts to overcome the problem by proposing the Theory of Meaningful Information (TMI), created by extending the universal methods of information formalization developed in programming beyond their original domain. TMI is a general information theory that includes:

· The information theory, which provides the object-oriented (OO) view of real and imaginary worlds;

· The intelligence theory, which defines the features of both natural and artificial intelligence and allows the formal specification of strong AI;

· The language theory, which includes the theory of the universal representation language and allows the universal representation language T to be formulated.

The first theory is a general conceptual system; the other two are its subsets, devoted to the features of intelligence and languages respectively.

TMI is an axiomatic theory that defines the key notions of information, knowledge, intelligence, language and sign solely on the basis of their functional characteristics. In this respect, it differs from other approaches in information science, which restrict these terms to the entities of their interest; most information-related theories, for example, understand a language as a natural human language and knowledge as human knowledge.

The theory is based on a general definition of information that can be applied to information of every kind, level and complexity. This definition enables a view of the world in terms of objects, actions, relations and properties. Information is considered a feature manifesting itself in the relations between certain real-world entities. TMI provides both a uniform view of all kinds of information and a universal language that actually supports the required representations.

TMI does not employ previously known conceptions and approaches to this subject and rejects all mathematically based concepts of meaningful information as irrelevant. The theory cannot be assessed in terms of approaches originating from formal logic and mathematics, such as first-order calculus, semantic nets, conceptual graphs, frames and ontologies, which constitute the basis of the formal languages traditionally used in knowledge representation, such as OWL (Smith, Welty, and McGuiness 2004), CycL (Parmar 2001), KM (Clark and Porter 1999), DART (Evans and Gazdar 1996) and others.

Chapter 2 contains the problem statement.

Chapters 3 to 5 detail the theories of information, intelligence and language, respectively. Chapter 6 introduces the language T.

Examples of representations are presented in Chapter 7.

2. Problem Statement

2.1. The Classical Information Sciences

The word “information” is derived from the Latin word “informatio”, from the verb “informare”, meaning to form or to put into shape; it designated physical forming as well as the forming of the mind, i.e. education and the accumulation of knowledge. This relatively broad meaning was narrowed in the Middle Ages, when information was equated with education. The meaning was continually refined until the first half of the twentieth century, when it came to be equated with “transmitted message”.

Today, various kinds of information are studied intensively by numerous information-related sciences such as communication media, information management, computing, cognitive sciences, physics, electrical engineering, linguistics, psychology, sociology, epistemology and medicine. Because every discipline concentrates on specific features of information and ignores others, they produce multiple incompatible approaches that make generalization of the subject virtually impossible. This is further complicated by the widespread practice of defining information with the help of other concepts such as knowledge and meaning, while ignoring related concepts such as data, signs and signals.

Given that the vast majority of information studies are trivial research without any pretension to broad generalization, it is no wonder that the Information Age, as the present era is often called, has so far failed to produce a general information theory despite several ambitious attempts to develop universal approaches.

2.1.1. Information Theory of Claude Shannon

Shannon’s theory was very efficient in solving practical problems of electrical engineering. However, the designation “Information Theory”, under which Shannon’s work is commonly known, is largely a misnomer that the scientific community spontaneously adopted on the wave of his approach’s popularity. Shannon himself never referred to his work in this way. The name Shannon gave his theory in the first draft in 1940 was “Mathematical Theory of Communication”, and he retained the same name in a publication in 1963 (Claude Elwood Shannon and Weaver 1963).

He also deliberately avoided considering the semantic characteristics of information, stressing in the aforementioned publication: “Frequently the messages have meaning; that is they refer to or are correlated according to some system with certain physical or conceptual entities. These semantic aspects of communication are irrelevant to the engineering problem” (Claude Elwood Shannon and Weaver 1963). Weaver wrote in the same publication: “The word information, in this theory, is used in the special sense that must not be confused with its ordinary usage. In particular, information must not be confused with meaning...”.

These citations effectively define the limits of Shannon’s theory, which is concerned with the processes of information transmission. The theory shows no interest in the main characteristic of information: its ability to designate meaning.

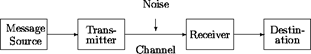

Paradoxically, Claude Shannon’s channel model (Figure 2-1, author’s own illustration) is very precise in defining the semantic components of information: they are exactly those parts of the complete picture that the model omits. That is, the sender’s semantics ends before (to the left of) “Message Source”, and the receiver’s semantics begins only after (to the right of) “Destination”.

Figure 2-1. Shannon’s channel model.

Furthermore, the subject of Shannon’s theory cannot even be considered a necessary attribute of information, because primitive information entities can be generated and consumed without any transmission process whatsoever when the message source and the message destination are the same (see 3.1.7 for details).

In fact, Shannon’s theory is no more than a specialized theoretical framework restricted to the task of transmitting information from one place to another.
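A minimal C++ sketch of the transmitter, noisy channel and receiver stages of the channel model makes this restriction concrete. The function names, the 8-bits-per-character encoding and the deterministic bit-flip noise are assumptions chosen for illustration, not part of Shannon’s formulation. Nothing in the pipeline depends on what the message means: the word “car” and the string “xq#” are handled identically.

```cpp
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Transmitter: turns the message into a sequence of bits, 8 per character,
// most significant bit first.
std::vector<bool> transmit(const std::string& message) {
    std::vector<bool> bits;
    for (unsigned char ch : message)
        for (int i = 7; i >= 0; --i) bits.push_back(((ch >> i) & 1) != 0);
    return bits;
}

// Channel with a noise source: flips the bits at the given positions.
std::vector<bool> channel(std::vector<bool> bits, const std::set<std::size_t>& noisy) {
    for (std::size_t pos : noisy)
        if (pos < bits.size()) bits[pos] = !bits[pos];
    return bits;
}

// Receiver: reassembles characters and hands them to the destination.
std::string receive(const std::vector<bool>& bits) {
    std::string message;
    for (std::size_t i = 0; i + 8 <= bits.size(); i += 8) {
        unsigned char ch = 0;
        for (std::size_t j = 0; j < 8; ++j)
            ch = static_cast<unsigned char>((ch << 1) | bits[i + j]);
        message.push_back(static_cast<char>(ch));
    }
    return message;
}
```

Flipping bit 7 (the lowest bit of the first character) turns “car” into “bar”. The channel registers one corrupted bit; that the meaning changed from a vehicle to a drinking establishment lies entirely outside the model, to the left of the source and to the right of the destination.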

2.1.2. Semantic Studies

Another approach is represented by semantics, which studies the meaning of signs and the relations between different linguistic units, such as homonymy, synonymy, antonymy and polysemy. A key concern is how meaning attaches to larger chunks of text, possibly as a result of composition from smaller units of meaning. Traditionally, semantics has included the study of sense and denotative reference, truth conditions, argument structure, thematic roles, discourse analysis, and the linkage of all of these to syntax.

The study of semantics started in the 19th century. Among the early pioneers in this field were Chr. Reisig, who founded the related field of study “semasiology” concerned with the meanings of words, and the French philologist Michel Jules Alfred Bréal, who proposed a “science of significations” that would investigate how sense is attached to expressions and other signs (Lyons 1977, 619).

In 1910, the British philosophers Alfred North Whitehead and Bertrand Russell published the Principia Mathematica, which strongly influenced the Vienna Circle, a group of philosophers who developed the rigorous philosophical approach known as logical positivism. Together with the German mathematician Gottlob Frege, Bertrand Russell also extended the study of semantics from the mathematical realm into the realm of natural languages. Logical positivism, the contemporary current of thought that sought criteria for evaluating statements as true, false or meaningless and thereby making philosophy more rigorous, was concerned with an ideal language and its characteristics, whereas natural languages were regarded as more primitive and inaccurate.

In contrast, the philosophy of “ordinary language” (Ludwig Wittgenstein) saw natural language as the basic and unavoidable matrix of all thought, including philosophical reflection. In this view, an “ideal” language could only be a derivative of a natural language (Lyons 1977, 140).

Modern approaches initiated by the U.S. linguists Zellig S. Harris and Noam Chomsky led to the development of generative grammars, which provided deeper insight into the syntax of natural languages by demonstrating how sentences are built up incrementally from basic ingredients (Yule 2006, 101).

The fundamental restriction that makes semantics unfit as the basis of any universal information theory is its exclusive connection to one specific kind of meaning: the kind that human beings transmit with the help of a natural language. Although this is the most complex kind of meaning, it is surely not the only one possible. Types of meaning largely ignored by semantics include the tacit knowledge possessed by human beings, the non-human knowledge acquired and used by various multicellular and even some unicellular organisms, and the knowledge of computers.

Another restriction of semantics is that it does not take into account the variability of meaning. The transmission of meaning with the help of language signs represents only one aspect of meaning; another is the creation and extension of the abilities to express and understand meaning. A newborn baby does not possess knowledge and can neither send nor receive meaning with the help of a natural language; however, this ability develops over the course of his or her life. By ignoring the variability of meaning, semantics deprives itself of any chance of understanding its nature.

2.1.3. KR Methods

The third approach is embodied by knowledge representation (KR), an area of artificial intelligence concerned with methods for the formal representation and use of knowledge. According to Sowa (2000), knowledge representation is the application of logic and ontology to the task of constructing computable models for some domain. It is a multidisciplinary subject that applies theories and techniques from a) logic, which defines the formal structure and rules of inference; b) ontology, which defines the kinds of things that exist in the application domain; and c) computation, which supports the application. Logic encompasses all methods and languages of formal data representation, such as rules, frames, semantic networks, object-oriented languages, Prolog, Java, SQL, Petri nets, Knowledge Interchange Format (KIF) (Genesereth 1998), Knowledge Machine (KM), CycL and so on.

The methods of KR partially overlap with those of semantics, and its goals are essentially the same: the formal representation of human knowledge, while knowledge of other kinds is ignored.

2.1.4. Miscellaneous Approaches

The last group of approaches consists of miscellaneous works targeting a general information theory, so far without any significant success.

Numerous attempts at producing a feasible formalization and generalization of meaningful information are based on Shannon’s theory, e.g. (Lu 1999), (Losee 1997).

A generalization of existing mathematical approaches, consolidated into one general theory based on probability, was proposed by Cooman (Cooman, Ruan, and Kerre 1995). Keith Devlin (Devlin 1995) tried to build a mathematical theory of information in the form of “a mathematical model of information flow”.

Tom Stonier (Stonier 1990) proposed a theory, in which he considered information to be a basic property of the universe much like matter and energy.

At the other end of the scale, there are approaches that deny the mathematical core of information and describe the world as consisting of entities and interactions, with information being a reflected relationship between them (see Markov, Ivanova, and Mitov 2007). Many works on the topic are not precise enough and often produce a vague conception of the subject (Burgin 1997).

The building of a unified information theory is discussed by Flückiger (1997) and by Fleissner and Hofkirchner (1996).

In summary, the theories developed in semantics and knowledge representation, as well as Shannon’s information theory, are specialized concepts designed for the representation of certain kinds of information or certain stages of information processing. The group of theories targeting the creation of a unified information theory (2.1.4) has never been able to develop a practically usable, universal apparatus of information representation because of their abstractness.

2.2. The Short History of Programming

The fact that a general information theory has not been developed so far by no means implies that it cannot be developed in principle. The true reason for the failure is the inability of the aforementioned theories to provide both a uniform view of all kinds of information and universal data representation tools.

The answer to the not-so-rhetorical question of what such a theory and language would be like can be found in programming, which has always been the only source of practically usable methods for the semantically relevant formalization of information. It may seem paradoxical that all the aforementioned theories completely ignored the modern ideas developed in programming, but this disinterest has its roots in the recent history of computer science. The first theoreticians of programming were mathematicians and logicians who viewed it as a branch of mathematics, and their approach produced an overly mathematized theory of programming languages. Some theoretical constructs, such as formal language theory based on the ideas of the linguist Chomsky (Chomsky 1953, 1956, 1957) and theories of formal semantics, have also been intensely scrutinized by information-related studies.

The break between computer science and the information-related sciences occurred some time in the last decade of the twentieth century, when the software community quietly rejected highly mathematized theories because of their ineffectiveness. Concentrating on the development of practically usable programming tools proved much more efficient, but the essentially anti-theoretical way in which this was done precluded any scientific discussion of the issue. As a result, the information-related sciences were left out of the loop regarding new trends in computer science, and communication between these scientific domains stopped.

Yet more astounding was the failure of computer science to understand the reasons for its own fate.

In earlier times, computer science was an extremely lively discipline with the status of a fundamental science. New ideas were intensively discussed in dozens of journals and symposia, and numerous universities and firms created large numbers of new programming languages every year. All that changed at the end of the eighties, when the once feverish process of creating new languages gradually lost momentum and nearly came to a complete standstill. The interest in new languages, as well as the ambitions of their creators, declined sharply.

Of the more than 2,500 documented programming languages created over the last sixty years (O’Reilly Media 2004), only several dozen have been developed since the beginning of the nineties. The goals of language developers also changed. New languages were no longer supposed to supersede their predecessors, as had often been the case before, but rather to solve previously unknown programming tasks. The few remaining journals and conferences devoted to programming languages are tributes to past glory that supply postgraduate students with publication opportunities rather than places for formulating and discussing new ideas.

The paradigm change in computer science was reinforced by the radical transformation of the political and economic world order at the end of the Cold War and by rapid technical development.

The Cold War was, among other things, the golden age of science. Mutually perceived threats led to an immense boom in research and development, with pioneering inventions and technologies created, fueled and sponsored by the military and its needs. Computer science was one of the main beneficiaries of this world order. After the end of the Cold War, research became increasingly dependent on economic needs because of privatization, and, as normally happens to previously privileged branches, computer science was hit hard by the new reality.

The spreading PC revolution completely changed the needs that software developers had to satisfy. Personal computers, once considered expensive toys, became full-fledged computers whose performance increased exponentially. They were readily available to everyone instead of belonging to a small, elite circle of researchers, and miniaturization and everyday requirements once again shifted the research focus away from high theory toward practicability.

In addition, Moore’s law accurately predicted the doubling of computer performance every 18 to 20 months. Faster hardware made obsolete the sophisticated software solutions that the scarce resources of the earlier decades of the computer era had required. In fact, the PC revolution actively contributed to the demise of the economically unsustainable research teams that had been responsible for software development in previous decades.

On the other hand, all these undoubtedly significant events could not by themselves have stopped scientific research, because the basic motive of this research remained just as valid in the new economic environment. New languages were created to overcome the fundamental deficiencies of old languages, and as long as these deficiencies existed, research had to continue. Consequently, the only plausible explanation for the end of this seemingly eternal development process is that the problems driving it had been fixed.

The behavior of the software industry fits this explanation exactly. One of the richest and most innovation-friendly industries ever, the software industry invested countless billions of dollars in literally everything but showed hardly any interest in examining new approaches to programming languages. The same is true of the open source community, which developed huge amounts of free software with widely varying functionality but paid astonishingly little attention to this area.

Another argument in favor of this explanation is the overwhelming practical effectiveness of Moore’s law. Though this effectiveness is viewed as self-evident, it is not a necessary result in general. Just imagine how much weaker the final impact of Moore’s law would be if a compiler needed a tenfold increase in computer performance whenever the size of the compiled program doubled.

Such restrictions, however, never surfaced in reality, even though the size of software installation packages grew from less than one megabyte at the beginning of the nineties to several gigabytes today. This unprecedented scalability was achieved without changing the basic programming means. Twenty years ago, the main compiled programming language was C; now it is its near superset, C++. The fact that this tremendous growth in software size was achieved within the limits of the same language concept is the ultimate proof of the concept’s maturity.
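The hypothetical compiler of the previous two paragraphs can be quantified with a short calculation. The figures are illustrative assumptions, not data from the text: a compile cost C(n) that grows tenfold each time the program size n doubles, growth from 1 MB to 4 GB (the text says only “less than one megabyte” and “several gigabytes”), a span of 25 years, and the 18 to 20 month doubling period of Moore’s law given above.

```latex
% Tenfold cost per doubling of program size n
C(2n) = 10\,C(n) \;\Longrightarrow\; \frac{C(n)}{C(n_0)} = \left(\frac{n}{n_0}\right)^{\log_2 10} \approx \left(\frac{n}{n_0}\right)^{3.32}

% Assumed growth from 1 MB to 4 GB
\frac{n}{n_0} = \frac{4\ \mathrm{GB}}{1\ \mathrm{MB}} = \frac{2^{32}}{2^{20}} = 2^{12}
\;\Longrightarrow\; \frac{C(n)}{C(n_0)} = \left(2^{12}\right)^{\log_2 10} = 10^{12}

% Hardware gain over 25 years (300 months), one doubling per 20 or 18 months
2^{300/20} = 2^{15} \approx 3.3 \times 10^{4}, \qquad 2^{300/18} \approx 1.0 \times 10^{5}
```

Under these assumptions, the compiler would need about 10^12 times more performance, while hardware delivered only about 10^4.5 to 10^5, so compiling a present-day package would take roughly ten to thirty million times longer than compiling an early-nineties one.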

Summing up the above, the older computer science became obsolete because the historical competition between different approaches to programming ended with the creation of the universal compiled programming language C++[3].

The development of programming languages started a few years after the appearance of the first digital computers, because direct programming in machine code was exhausting and slow[4]. The first implemented programming languages were primitive interpreters, such as Short Code, which appeared on the UNIVAC in 1952 (Schmitt 1988), and Speedcoding, proposed for the IBM 701 in 1953 (Backus 1954). The first working assemblers, which appeared at about the same time, further simplified programming by allowing symbolic names to be used instead of physical addresses and numerical machine-command codes.

The first high-level programming language, FORTRAN (Formula Translator), appeared in 1956 and for the first time allowed programs to be written in terms of their logic rather than the machine code that implemented them. IPL (Information Processing Language), Lisp (LISt Processing), COBOL (Common Business Oriented Language) and others soon followed. As early as 1957 it had become clear that machine-code programming was no longer the problem; the real obstacle to effective programming was the growing number of specialized programming languages.

No wonder the quest for a universal programming language was declared the top priority of computer science early on. But what is a universal programming language? The answer was not clear at the time, in particular because there were distinct interpretations of the notion of universality.

· The perfectly impracticable universality of the Turing machine was formally defined by the British mathematician Alan Turing in 1936 (Hodges 1983). A Turing machine is a logical device that scans one square at a time (either blank or containing a symbol) on a paper tape. Depending on its current state and the symbol read from the scanned square, the machine erases the symbol or prints a new one, moves the tape one square backward or forward, and changes its state.

Programming theorists considered this machine a truly universal device, because it could presumably execute every existing algorithm. The fact that, owing to its complete uselessness, no one ever tried to build such a machine for practical use never impressed the elevated minds. In fact, this far-from-the-real-world understanding of universality only made the issue more confusing, without any positive impact. It also contributed to the demise of the former computer science, because a science that assigned such a distinguished role to so unusable an interpretation became increasingly detached from reality and practical needs, disregarding pressing demands of the day.

· Another idealistic understanding of universality viewed mathematics as the philosopher’s stone of programming. According to its proponents, it was only necessary to find the proper formulas, and every programming problem could be solved effectively. Over time, this approach developed into the idea of declarative languages, which express the logic of a computation without describing its control flow. Declarative programming often treats programs as theories expressed in formal logic and computations as deductions in that logic.

Two kinds of declarative programming were once considered extremely promising: functional programming, which treats computation as the evaluation of mathematical functions, and logic programming, which is based on mathematical logic and whose most prominent language is Prolog. While declarative languages generated a lot of noise in computer science, their impact on programming practice was hardly noticeable.

· Two understandings of universality that were closer to reality were actually used in practical programming. Jean Sammet characterized them in 1968 in her outstanding study of programming languages (Sammet 1969, 723):

“It is no accident that the development of a specially created universal programming language is omitted from this chapter. It is my firm opinion that not only is such a goal unachievable in the foreseeable future, but it is not even a desirable objective. It would force regimentation of an undesirable and impractical kind and either would prevent progress or, alternatively, would surely lead to deviations. However, the possibility of a single programming language with powerful enough features for self-extension to transform it into any desired form is interesting to consider. (by any desired form, I mean all the languages in this book, plus any other which are developed subsequently) The techniques for this development are clearly unknown currently, but they could conceivably be found in the future”.

What this citation calls a universal programming language is the concept of a universal high-level programming language. Such a language tries to give programmers everything they could possibly want already built into the language. This understanding dominated programming for several decades before it finally gave way to the middle-level programming language (the “single programming language” of the citation), which used a minimal set of control and data-manipulation statements that allowed high-level constructs to be defined.

The first attempt at a universal programming language was Algol-58 (ALGOrithmic Language), originally defined in 1958 by the Zurich-based International Committee as the Esperanto of the computing world. Because of their complex constructs and inexact specifications, Algol-58 and its successor Algol-60 were implemented only slowly and incompletely. They nevertheless sparked the creation of other languages such as NELIAC (Navy Electronics Laboratory International ALGOL Compiler, 1959), MAD (Michigan Algorithm Decoder, 1960) and JOVIAL (Jules’ Own Version of IAL, first implemented in 1961). JOVIAL was the first attempt to design a programming language covering several application areas.

A more successful attempt at a general-purpose language was PL/1 (Programming Language One, originally called NPL for New Programming Language, 1964), which was intended to replace most of the programming languages of the time, including FORTRAN, COBOL, ALGOL and JOVIAL. Although the software community used it actively, PL/1 fell short as a universal language, mostly because of its excessive complexity, and so the creation of new specialized languages continued.

The next attempt to develop a universal programming language was Algol-68, initially defined in 1968. The language was overly complex and seldom used.

The final and most expensive effort to create a universal programming language was Ada, whose development started at the request of the US Department of Defense in 1974. The department was struggling to reduce the number of programming languages used in its projects, which had reached around 450 at the time, although none of them was actually suited to its purposes. The result was an overly complex programming language, first implemented in 1983. Like its predecessors, Ada was not very successful and is now used only to a limited extent, mainly in the military projects from which it originated.

The most interesting paradox is that the first truly universal programming language had already been implemented before Ada’s design even began. The language C, created by Dennis Ritchie between 1969 and 1972, was “a system implementation language for the nascent Unix operating system. Derived from the typeless language BCPL, it evolved a type structure; created on a tiny machine as a tool to improve a meager programming environment” (Ritchie 1993). C was called a middle-level programming language because it lacked many features characteristic of high-level languages. Yet precisely this parsimony was the true basis of its advantages, since the very features it omitted were what made high-level languages perform poorly.

C provided such efficient low-level access to memory that assembler programming became largely obsolete. Its capabilities also encouraged machine-independent programming. A standards-compliant, portably written C program can be compiled for a wide variety of computer platforms and operating systems with little or no change to its source code.

In 1983 Bjarne Stroustrup, then a researcher at Bell Labs, implemented the object-oriented extension of C called C++ (originally C with Classes) (Ellis and Stroustrup 1990), and the never-ending race for new languages gradually lost its appeal. The universal compiled programming language was born!

2.3. The TMI View of the World

The conceptual system of TMI is based on an extension of the notational system of C++, which, together with its ancestor C, forms the basis of virtually all complex software: operating systems, programming software (compilers, interpreters, linkers, debuggers) and complex application software for various purposes. The unshakable dominance of C++ is in essence the ultimate proof of the universality, reliability and consistency of this language’s conceptual system.

In the world of programming languages, however, universality is not absolute. C++ was able to replace other imperative compiled programming languages like COBOL, FORTRAN, PL/1, Ada and Pascal, but not interpreter-based languages such as Java, JavaScript, PHP, Perl, Basic, command shells and so on. Even so, C++ has steadily demonstrated its influence in other programming domains as well: most object-oriented programming languages designed in the last 20 years use a C/C++-like syntax, even though this syntax is more complex than that of Pascal.

C++ played a decisive role in establishing object-oriented (OO) programming[5], which became the dominant programming methodology in the 1990s. C++ was neither the first object-oriented programming language (that distinction belongs to Simula 67, which was followed by Smalltalk) nor the only object-oriented extension of an existing language. Object-oriented features were added to many existing languages, including Ada, FORTRAN and Pascal, but none of these extensions achieved comparable success. Adding such features to languages that were not originally designed for them often led to problems with code compatibility and maintainability.

C++ avoided these problems because it was originally built as the smallest possible extension of the already minimal C. Its minimalist structure made the language extremely efficient and effectively discouraged further attempts to produce alternatives, with exceptions such as the programming language D (Alexandrescu 2010), which claims to be a better C++.

The current state of the information-related sciences is in many ways similar to that of programming in its early decades. Like programming then, these sciences use many specialized languages; like programming, they understand that a universal language is possible (natural language being the ultimate example); and like programming, they hold distinct interpretations of universality. The difference is that the final outcome in programming (C++) is already known and can also be used by the information-related sciences to develop truly universal methods of information representation.

Of course, C++ is very far from being the universal representation language required by a general information theory. It is a programming tool, possessing that strange thing called an implementation, which natural languages seemingly lack. Its expressive power is restricted to the representation of bit sequences allocated in computer memory, and the only expressible operations are sequentially organized manipulations of these bits. On the other hand, C++ and the object-oriented methodology are the undisputed holders of the patent on universality (even if only within their restricted representation domain), and that is the only thing that matters here.

Hence, the approach of TMI consists of extending the universal methods of information formalization developed in programming beyond their original domain.

***

According to TMI, every real or imaginary entity can be viewed as an object whose behavior can be expressed in the manner of a programming algorithm. For example, the algorithm of the Earth consists of its cyclical movement around the Sun and its daily rotation about its axis. Just as the structure of the Sun and the Earth and their algorithms can be described in English, they can also be described in the universal representation language T, which expresses these algorithms in an object-oriented notation based on the conceptual system of C++.
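T’s own syntax is not given at this point, so the following is only a plain C++ approximation of the Earth example. The class and member names are hypothetical and the values simplified (one rotation per solar day, 365.25 days per revolution). In C++ such a class designates nothing more than a few bytes in memory that simulate the two cyclic movements. The claim of TMI is that a notation of this kind can also denote the real planet.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical C++ approximation of the Earth's "algorithm": two cyclic
// movements, tracked as angles in degrees. Values are simplified assumptions.
class Earth {
public:
    // Advance the object's algorithm by the given number of days.
    void advance_days(double days) {
        assert(days >= 0.0);
        // rotation about its axis: 360 degrees per solar day
        rotation_deg_ = std::fmod(rotation_deg_ + 360.0 * days, 360.0);
        // revolution around the Sun: 360 degrees per ~365.25 days
        orbit_deg_ = std::fmod(orbit_deg_ + 360.0 * days / kDaysPerYear, 360.0);
    }
    double rotation_deg() const { return rotation_deg_; }
    double orbit_deg() const { return orbit_deg_; }

private:
    static constexpr double kDaysPerYear = 365.25;
    double rotation_deg_ = 0.0;
    double orbit_deg_ = 0.0;
};
```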

In essence, T can be considered an extension of the expressive abilities of C++ linguistic signs to the representation of entities beyond the C++ representation world. The only entities that can be referenced in C++ are bit sequences allocated in computer storage, and the only operations are sequentially organized manipulations of these sequences. Entities and algorithms beyond these cannot be expressed in the language. T is different: it allows miscellaneous discourses to be composed, exactly as in a natural language.

The definition of T is not tied to any particular implementation. Like that of a natural language such as English or German, it consists of the language’s grammar, usage rules and core vocabulary. Notions of T such as class, instance, procedure, variable and parameter play the role of nouns, verbs, adjectives, pronouns and other parts of speech.

T allows implementations with the semantics of existing programming languages, whether compiled (C++, Pascal) or interpreted (Java, Basic, command shell). Ultimately, it allows the creation of a monolingual communication environment in which it is the only language used, both for programming miscellaneous tasks and for composing diverse non-executable formal documentation.

***

The term unambiguousness can be understood in very different ways. This work uses the following interpretation.

In TMI, an unambiguous text is a text that allows only one meaning in the given context. Thus, if there is a set of objects called objset, each element of which is identified by a unique number, the text “objset[12345]” unambiguously refers to a single item in this set.

This text becomes ambiguous, however, if distinct items share the same identifier or if there is more than one set named objset. Ambiguous texts are considered incomplete descriptions that lack necessary characteristics of the subject in question. Conversely, an unambiguous representation of an entity has to contain as many of the entity’s characteristics as are needed to narrow the represented meanings down to exactly one.

T is able to produce unambiguous representations because it allows missing characteristics to be added without limit. The characteristics can be of various kinds, such as the entity’s location in time or space; the objects, events and states associated with it; the real or imaginary world to which it belongs; and so forth.
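The notion of unambiguousness used here can be sketched in C++ by counting how many items a reference could denote. The `Item` type, its `world` field and the sample data are hypothetical. `world` stands in for one of the additional characteristics just mentioned. A reference is unambiguous in this sense when the count is exactly one.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical element of a set called objset.
struct Item {
    int id;             // identifier used in references such as objset[12345]
    std::string world;  // an additional characteristic (e.g. real or imaginary world)
};

// How many items could the reference "objset[id]" denote?
std::size_t meanings(const std::vector<Item>& objset, int id) {
    return static_cast<std::size_t>(std::count_if(
        objset.begin(), objset.end(),
        [id](const Item& it) { return it.id == id; }));
}

// Adding one more characteristic narrows the number of possible meanings.
std::size_t meanings(const std::vector<Item>& objset, int id, const std::string& world) {
    return static_cast<std::size_t>(std::count_if(
        objset.begin(), objset.end(),
        [&](const Item& it) { return it.id == id && it.world == world; }));
}
```

With two items sharing the identifier 12345, `meanings(objset, 12345)` yields 2 (ambiguous). Adding the world characteristic brings it down to 1.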

***

Language and semantics. The traditional understanding of the relationship between a language and semantics (meaning) treats the latter as part of the former. This interpretation follows both from the history of semantics, which originally developed as a subfield of linguistics, and from its purpose, traditionally understood as “the study of the meaning of linguistic signs” [“Linguistics”, in: Encarta].

The superior entities in TMI are knowing objects (communicators) that use a language to exchange semantics with each other. Communicators are persons, computers or any other entities that possess some semantics (knowledge) and want to exchange it with other communicators.

The relationship between semantics and a language is equivalent to that between the freight exchanged by senders and receivers and the delivery services that transport it to the required locations. While a delivery service may be excellent at moving goods from point A to point B, it generally has no competence beyond that, because it neither produces nor uses the transported freight. The only way to understand the transported freight is to study its senders and receivers.

The approach of TMI consists of explicitly defining the communicators participating in every communication. Communicators are primal language objects that exchange information by means of language code. Their structures and algorithms are quite similar to the objects and algorithms specified in a programming language like C++, but unlike the latter they cannot be represented in executable code.

3. The Information Theory

3.1. The General Model of Meaningful Information

3.1.1. Definition of Information

According to the standard theory of cosmology, literally everything (matter, space and time) started with the Big Bang. In the beginning, all the matter and energy that make up the universe were squeezed into an infinitely hot, dense, unstructured singularity. It then started to expand and became more structured. The fundamental forces separated, and matter formed atomic nuclei, leptons and molecules. The content separated into distinct components, some of which were more stable than others and therefore preserved their form and size over long periods of time.

Thus our universe was formed. We can consider it a super-heap consisting of stable objects at different levels of the organization of matter and of heaps of such objects. The formation and evolution of the universe consist of the permanent birth, death and change of all entities in the heap. A stable object is understood here as a three-dimensional item with mass that is reasonably steady, has a location or position in space at any moment, and can be changed by exerting force. The stable objects at the lower levels of the organization of matter are inanimate physical bodies such as stars, planets, stones, molecules, atoms and subatomic particles like hadrons and leptons. Those at the upper levels are living organisms of varying complexity, from unicellular bacteria up to human beings.

Under the influence of force, a stable object changes its speed or direction of movement, starts or stops moving, or is distorted. This happens when it is exposed to the physical (energetic) influence of another object or process, for example when one object hits another and changes its movement. A body at rest can start moving if the balance of forces maintaining its immobility is disturbed. A bridge, for example, is stable because its weight is balanced by the counter-pressure of its pillars, and it fails if one of the pillars is damaged.

A general characteristic of force-induced changes is that the effectual change occurs exclusively because of the energy produced or withdrawn by the causal action (as in the case of the falling bridge).

Stable objects are not really static but rather dynamic combinations of elements. Electrons move around atomic nuclei, quarks exchange gluons within hadrons, and molecules take part in chemical reactions inside cosmic objects and cellular organisms. More precisely, stable objects are dynamic systems that are better at adapting to their environmental conditions and can therefore retain their form and stability. This ability to adapt means that they can resist external and internal influences, either by ignoring them or by changing in order to counteract them. Since the ability to resist is limited, a stable object collapses or is changed if the force acting on it exceeds a certain threshold.

The life of a stable object can last from a fraction of a second to billions of years, during which it is constantly moving and/or changing. This work defines the sequence of changes and movements of a stable object as the object’s algorithm, which can be represented by various means such as pictures, diagrams and texts composed in various languages.

The subject considered here is the causal relationship between changes in stable objects and their environments. The phenomenon of causality, famously characterized by David Hume as the cement of the universe (Davis, Shrobe, and Szolovits 1993), includes many different causal relationships. We will limit ourselves to changes occurring in objects under various influences in a Newtonian world.

According to the Random House Unabridged Dictionary (“RHUD” 2002), the term causality denotes “a necessary relationship between one event (called cause) and another event (called effect) which is the direct consequence (result) of the first.”

The term change is defined in the same dictionary as “to make the form, nature, content, future course, etc., of (something) different from what it is or from what it would be if left alone”.

The axiom of this work is that all causal changes in the real world are based on only four distinct schemes: two primitive causal relations occurring under the influence of physical forces and two complex ones. Changed entities are either complex stable objects including several components or systems thereof.

3.1.2. Change I driven by an Internal Force (internal change)

Changes of this kind are independent of the changed object’s external environment, or at least are considered independent at the level at which the object is analyzed. A stable object alters its form, position or structure under the force produced by its internal processes. Such change is the most frequent kind of alteration among living organisms. Examples include the growth of living creatures, human mental processes and cell division.

Non-living physical entities also change in this way, especially those consisting of a dynamically balanced set of physical processes, such as stars. Unstable atoms disintegrate through spontaneous radioactive decay, which can take a very long time. This simplest causal mechanism, occurring within a single stable object, is designated here as internal change.

Internal change is represented by a horizontal rectangle split along the x-axis into two parts. The upper half refers to the changed entity and the lower half describes its change. Because the object changes spontaneously, without any apparent external force or influence, the graphic contains only this one rectangle, with no other objects and no arrows.


Figure 3‑1: Spontaneous change
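Here is a minimal C++ sketch of an internal change, assuming a toy cell model with invented numbers. The `step()` member takes no argument, so nothing outside the object enters the change. Growth and division follow solely from the object’s own state.

```cpp
#include <cassert>

// Hypothetical toy model of change I (internal change). step() has no
// parameters: the object's state is altered only by its own internal process.
class Cell {
public:
    // One time step of internal metabolism: grow, and divide once the size
    // has reached twice the birth size.
    void step() {
        size_ += kGrowthPerStep;
        if (size_ >= 2 * kBirthSize) {
            size_ -= kBirthSize;  // this cell keeps the remainder...
            ++divisions_;         // ...and a daughter cell of birth size splits off
        }
        assert(size_ < 2 * kBirthSize);  // invariant holds since growth < birth size
    }
    int size() const { return size_; }
    int divisions() const { return divisions_; }

private:
    static constexpr int kBirthSize = 10;     // arbitrary units (assumption)
    static constexpr int kGrowthPerStep = 3;  // arbitrary units (assumption)
    int size_ = kBirthSize;
    int divisions_ = 0;
};
```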

3.1.3. Change II driven by an External Force (forced change)

The second basic kind of causal relation consists of changes that occur as a result of externally acting forces. According to Britannica, force is “any action that tends to maintain or alter the motion of a body or to distort it” (“Force” in Britannica Encyclopedia Ultimate Reference Suite 2009).

The mechanism of this change is simple: the influence of a force alters the affected object or its movement. A cosmic body changes its orbit under the influence of gravity produced by some external entity; a bullet accelerates because of the powder explosion in the cartridge; glass breaks when it falls to the floor, and so on. This form of causal mechanism is called forced change here.

Forced change is the simplest form of causal relation between two objects. The first of them, a causal action of force, delivers the energy that causes the effectual change in the second, a stable object. Forced change is represented as follows:

Figure 3‑2: Externally driven change

The empty part of the upper rectangle illustrates the fact that a causal change can have an arbitrary origin. The arrow designates the direction of force. The vertical order of rectangles represents their relationship in time.
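The defining property of forced change is that the effect is produced solely by energy delivered (or withdrawn) by the causal action. This can be sketched in C++ for a one-dimensional body under a constant force. The names and the SI-unit convention are assumptions for illustration. The work done by the force appears as the change in the body’s kinetic energy.

```cpp
#include <cassert>

// Hypothetical sketch of change II (forced change): a body in one dimension.
struct Body {
    double mass;      // kg
    double velocity;  // m/s
};

// Applies a constant external force (N) for dt seconds and returns the energy
// delivered to the body (J); a negative value means energy was withdrawn.
double apply_force(Body& b, double force, double dt) {
    assert(b.mass > 0.0 && dt >= 0.0);
    const double before = 0.5 * b.mass * b.velocity * b.velocity;
    b.velocity += force / b.mass * dt;  // a = F / m; exact for a constant force
    const double after = 0.5 * b.mass * b.velocity * b.velocity;
    return after - before;  // kinetic energy supplied by the causal action
}
```

Without a force (`force == 0`) the body’s motion and energy stay unchanged, which mirrors the statement that the change occurs exclusively because of the energy involved.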

3.1.4. Change III activated by an External Force (activated change)

This and the following causal mechanism are based on sequences of the primitive changes described above. The entities subject to change are complex stable objects with several components and internal energy sources, or systems consisting of several components and energy source(s). Furthermore, the changed objects are either living organisms or inorganic systems of artificial origin. Inorganic objects of natural origin are too primitive for such complex behavior.

An activated change is a change of a stable object (system) caused by an external, non-forceful influence. The changed object (system) has to possess (or have access to) an energy source and an externally controlled lock (switch) that opens and closes the flow of energy produced or delivered by that source.

A typical example of this kind is an electric light operated by a button or lever: someone (or something) switches it on, and the bulb lights up. The sequence of actions includes the following four activities:

(1) external force presses the button;

(2) the button closes the electrical circuit thereby unlocking the energy source;

(3) electricity flows to the bulb;

(4) bulb lights up.

The general graphical schema of this change is as follows:

Figure 3‑3: Externally activated change

The difference between change II and change III is the addition of steps two and three, which are triggered externally. The lack of an arrow between them indicates that no energy passes between the respective changes. Change III is thus a kind of combination of change I and change II, in which an internal change is triggered by an external force.

The element through which an external influence activates an internal change is referred to in this work as an activating switch or activator.
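The four steps of the light example can be sketched in C++ as follows. The class and member names, and the battery standing in for the energy source, are hypothetical. Note that `press_switch()` carries no energy parameter: the activator only opens or closes the path, while the energy for the resulting change is drawn from the system’s own source.

```cpp
#include <cassert>

// Hypothetical sketch of change III (activated change): an electric lamp.
class Lamp {
public:
    explicit Lamp(double battery_joules) : battery_(battery_joules) {
        assert(battery_joules >= 0.0);
    }

    // Steps (1)-(2): an external action toggles the switch, closing or
    // opening the circuit. No energy is transferred to the lamp here.
    void press_switch() { circuit_closed_ = !circuit_closed_; }

    // Steps (3)-(4): if the circuit is closed, energy flows from the internal
    // source to the bulb for the given time and the bulb is lit.
    bool step(double watts, double seconds) {
        const double needed = watts * seconds;
        const bool lit = circuit_closed_ && battery_ >= needed;
        if (lit) battery_ -= needed;  // energy comes from the lamp's own source
        return lit;
    }

    double battery() const { return battery_; }

private:
    double battery_;               // internal energy source (joules)
    bool circuit_closed_ = false;  // state of the activating switch
};
```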

Examples among biological objects are unicellular organisms that register changes in their environment with their sensors and respond by changing their metabolism. Components built on the principle of activated change are integral parts of any technical system, such as electronic circuits.

A more elaborate example is a traffic light at a pedestrian crossing (crosswalk). A person approaches the crosswalk and pushes a button; the traffic light changes to red and the crosswalk sign flashes green. The only work the person has to do is push the button. The internal component(s) and energy source(s) do the rest.

The specific feature of this type of causal relation is the energetic independence of the activating action and the resulting change, which allows various activation-result pairs to share the same internal mechanism. This energetic independence radically increases the survival chances and adaptability of the systems that implement it.

Let us imagine a sea lagoon fed by an intermittent river. Some microorganisms, whose nutrition is delivered by the river water, have adapted to the changeable conditions by slowing their metabolism when the oxygen level in the water drops below a critical point. The internal mechanism registering the oxygen level is a typical activator: it changes the behavior pattern of these microorganisms in response to changes in the external environment.

If some of these microorganisms are carried by currents into the open sea, they encounter another changeable food source, consisting of unsteady currents of very salty water. Surviving in these conditions requires another activator, which would regulate the microorganism’s metabolism in response to changes in water salinity, while the activator-controlled basic structures can be left unchanged.

From the viewpoint of evolution, this is a much more efficient path of development than producing a completely new microorganism, which would be necessary if activating switches were not involved in the evolutionary process.
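The evolutionary argument can be sketched in C++. One unchanged internal mechanism (switching to a slower metabolism) is driven by a list of interchangeable activators, and adapting to the sea means adding a salinity activator rather than redesigning the organism. All names, units and thresholds are invented for illustration. That high salinity also triggers the slowdown is an assumption.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical environment readings (fields and units are illustrative).
struct Environment {
    double oxygen_mg_per_l;
    double salinity_g_per_l;
};

// An activator inspects the environment and decides whether to trigger
// the internal mechanism ("slow down metabolism").
using Activator = std::function<bool(const Environment&)>;

class Microorganism {
public:
    void add_activator(Activator a) { activators_.push_back(std::move(a)); }

    // The internal mechanism itself never changes; only its triggers do.
    void sense(const Environment& env) {
        slow_ = false;
        for (const Activator& a : activators_) {
            if (a(env)) slow_ = true;
        }
    }

    bool slow_metabolism() const { return slow_; }

private:
    std::vector<Activator> activators_;
    bool slow_ = false;
};
```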

3.1.5. Change IV communicated by an External Force (communicated change)

The mechanism of activated change offers a substantial gain in flexibility over the primitive causal mechanisms I and II. On the other hand, it also has its restrictions, as the traffic light example convincingly demonstrates. In the form described above, such traffic lights are hardly usable on a busy street, because the many pedestrians who want to cross would effectively block the traffic by persistently pushing the crosswalk button.

To solve this problem, the traffic light needs to be able to determine the switching moment on its own, which means reversing the cause-effect direction. Assume that a person does not push the button. Instead, the button is periodically (e.g. once every 3 minutes) pushed against the finger of a person presumably holding it. If the finger blocks the button, the crosswalk light switches to green for pedestrians and red for cars; otherwise, the traffic light remains unchanged.

Certainly, this is not a very convenient scheme for traffic lights, nor does it give pedestrians complete satisfaction. A more efficient solution adds one more element to the switching scheme: a passive mediator object (in this case a button together with associated components), which a pedestrian can press and the traffic light can query periodically. By pushing such a button, the pedestrian only switches it to another state, which the traffic light can detect when it queries the button. If the button is set, the traffic light unsets it and then switches the crosswalk light to green for pedestrians.
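The mediator scheme can be sketched in C++ as below. The class names and the `poll()` method are hypothetical. For simplicity, the model assumes that each green phase ends before the next periodic query. The pedestrian (actor) only sets the button’s state, while the traffic light (reactor) queries it on its own schedule. As a result, repeated presses between two queries collapse into a single request instead of blocking traffic.

```cpp
#include <cassert>

// Hypothetical passive mediator: it only stores a state.
class Button {
public:
    void press() { pressed_ = true; }        // set (by the pedestrian)
    bool query() const { return pressed_; }  // query (by the traffic light)
    void reset() { pressed_ = false; }

private:
    bool pressed_ = false;
};

// Hypothetical reactor that decides the switching moment itself.
class TrafficLight {
public:
    explicit TrafficLight(Button& b) : button_(b) {}

    // Called periodically (e.g. every 3 minutes) by the light's own timer.
    void poll() {
        walk_green_ = false;  // assumption: the previous green phase has ended
        if (button_.query()) {
            button_.reset();     // unset the variable first...
            walk_green_ = true;  // ...then switch the crosswalk light to green
        }
    }

    bool walk_green() const { return walk_green_; }

private:
    Button& button_;
    bool walk_green_ = false;
};
```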

This example demonstrates how information works: it is a state of some passive object, set by an actor and queried by a reactor in order to produce a resulting action. An actor has to set at least one state, and a reactor has to distinguish between at least two states. The absolute minimum is a dichotomous variable: the state set by the actor and its absence.

The following definitions will be used throughout this work:

The mediating object is a variable. From this follows an even shorter definition: information is the value of a variable used in an algorithm.

Communication is the process of interaction between an actor that sets a variable’s value and a reactor that identifies this value and, depending on it, performs one action or another.

An actor is designated as an Information Setting Entity (ISE) and a reactor as an Information Driven Entity (IDE).

The resulting change constitutes the semantics of information.

Compared with the activated change, this kind of causal relation is enhanced by three new steps inserted after the causal change.

Figure 3‑4: Communicated change

In this work, a variable is not considered a mathematical construction but an object in the discernible world. Since communicators and communications can differ in their features, the structure of the variable can differ, too. Thus, in an implementation where the crosswalk light directly distinguishes between pressed and non-pressed buttons, the variable consists of the complete button mechanism. In alternative implementations, the button mechanism is connected to an input port of the traffic light’s internal processor. There the mediating variable is the input port, and the button mechanism is an auxiliary appliance that the pedestrian uses to set this variable.

A variable need not be switchable between its states without limit. A variable is considered constant if it cannot change during its whole life span, e.g. a printed letter, which is essentially a picture painted on some surface. Other special cases are variables that can be changed irreversibly, i.e. only once. For example, an undamaged pencil can serve as one sign and a broken pencil as another.
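The two special cases can be sketched in C++. A `const` object plays the role of a constant variable such as a printed letter. A small hypothetical `Irreversible` template models a variable that may change only once, like a pencil that can be broken but not repaired. The names `Irreversible`, `PencilSign` and `printed_letter` are illustrative assumptions.

```cpp
#include <cassert>

// Hypothetical irreversible variable: starts in an initial state and may be
// changed at most once during its life span.
template <typename T>
class Irreversible {
public:
    explicit Irreversible(T initial) : value_(initial) {}

    // Returns false once the single permitted change has been used.
    bool set(T v) {
        if (changed_) return false;
        value_ = v;
        changed_ = true;
        return true;
    }

    const T& query() const { return value_; }

private:
    T value_;
    bool changed_ = false;
};

// The pencil example: two distinguishable signs.
enum class PencilSign { Intact, Broken };

// A constant variable: any attempt to set it is rejected at compile time.
const char printed_letter = 'A';
```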

This understanding of information is consistent with the one applied in programming. In programming, variables are restricted to bits or bit sequences, and there are only two fundamental operations on variables, set and query; all other operations are based on these two.

Another restriction in programming is that the actor and the reactor are usually the same, so the communication occurs not between different objects but between different states of the same computer.
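A minimal C++ sketch of the two fundamental operations on a bit-sequence variable; `std::bitset` is the standard library type, while `setBit`, `queryBit` and `copyBit` are hypothetical helpers. The same program plays the actor when it sets a bit and the reactor when it later queries it, and `copyBit` shows a derived operation built only from set and query.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>

// A computer-level variable: a sequence of eight bits.
using Register = std::bitset<8>;

// set: switch one bit of the variable to a given value.
void setBit(Register& reg, std::size_t pos, bool value) {
    reg.set(pos, value);
}

// query: inspect the current value of one bit.
bool queryBit(const Register& reg, std::size_t pos) {
    return reg.test(pos);
}

// A derived operation composed only of query and set:
// copy the value of bit `from` into bit `to`.
void copyBit(Register& reg, std::size_t from, std::size_t to) {
    setBit(reg, to, queryBit(reg, from));
}
```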

3.1.6. Summary of Causal Relations

All four types of changes are shown in Figure 3‑5. Each rectangle contains the stable object (if present) and its change. The order from top to bottom represents the flow of time, and the arrows represent a force being exerted.

[Figure: four panels, left to right: Spontaneous change, Forced change, Activated change, Communicated change. Box labels, top to bottom: Causal change; Variable (Setting); Object (Inquire variable); Variable (Answering); Object.Switch (Unlocking energy source); Object.energy_source (Forcing energy flow); Object; Resulting change.]

Figure 3‑5: Summary of causal relations

3.1.7. Information Atoms

In general, there are three groups of processes associated with communication: information production (setting activity), transmission of information from one place to another, and use (resulting activity), as depicted in Figure 3‑6.


Figure 3‑6: Information-related activities

The transmission process, which was the only subject of Claude Shannon’s theory of information (Claude E. Shannon and Weaver 1948), can be reduced to nothing, as in the examples of the traffic light and microorganisms. In other cases, it can be very sophisticated and include numerous steps, each producing its own representation of the information. The stages of the transmission process are designated in this work as information processing.
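The three groups of processes can be sketched in C++ as a pipeline: a setting step produces a value, zero or more transmission stages (information processing) each turn it into a new representation, and a use step reacts to the final representation. The `Stage` type, the `communicate` function and the encode/decode stages in the test are hypothetical illustrations; with an empty stage list, the transmission process is reduced to nothing, as in the traffic-light example.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// One information-processing stage: turns one representation into another.
using Stage = std::function<std::string(const std::string&)>;

// Passes the value produced by the setting activity through every
// transmission stage and hands the final representation to the use step.
std::string communicate(const std::string& setValue,
                        const std::vector<Stage>& transmission,
                        const std::function<std::string(const std::string&)>& use) {
    std::string representation = setValue;     // produced by the setting activity
    for (const Stage& stage : transmission) {  // the list may be empty
        representation = stage(representation);
    }
    return use(representation);                // resulting activity
}
```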

(1) By their nature, variables, although they differ from activators, are also switches, and they are involved in two basic activities. The operation set switches a variable to some value, and the operation query inspects the current value of a variable and changes the IDE by switching its inquiry mechanism.

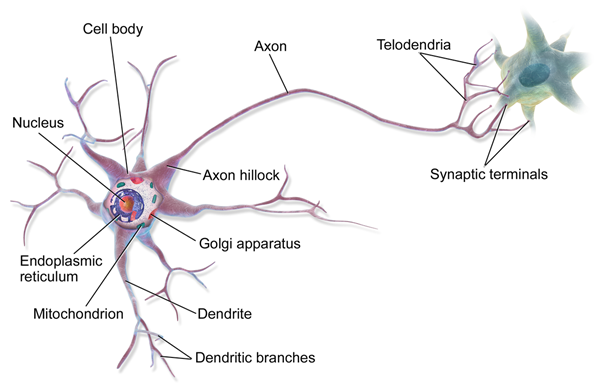

The difference between an activator and a variable is functional, not physical. A computer bit, the most primitive variable at the computer level, functions as an activator at the level of the memory chip that implements it. Another good example is a neuron in the brain acting as a variable, whereas a motor neuron connecting the brain with a muscle is an activator.

(2) Activators and variables represent two levels of information carriers. Activators are low-level entities that unite set and query in a single action, whereas variables represent high-level information processing, in which these two functions are separated from each other. The essential differences between these two forms of information use are:

· While an activating switch immediately causes the resulting change, the setting of a variable is detached in time from the querying result.

· A single variable can cause many changes in various ICE algorithms (see point 6 below), whereas an activator causes only one change.

· Many variables can be queried with the help of a single inquiring mechanism, which is essentially a switch.
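The three differences can be illustrated with a C++ sketch, assuming a hypothetical `Activator` whose trigger immediately runs its single effect and a hypothetical `Variable` whose set and query are separate calls that may happen at different times. `countActive` stands in for a single inquiring mechanism that queries many variables; none of these names come from the original text.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Activator: set and query happen in one action, so switching it
// immediately causes its single resulting change.
class Activator {
public:
    explicit Activator(std::function<void()> effect) : effect_(std::move(effect)) {}
    void trigger() { effect_(); }

private:
    std::function<void()> effect_;
};

// Variable: set and query are separate operations that may be
// detached in time, and any number of algorithms may query it.
class Variable {
public:
    void set(int value) { value_ = value; }
    int query() const { return value_; }

private:
    int value_ = 0;
};

// One inquiring mechanism querying many variables: counts those
// whose current value is non-zero.
int countActive(const std::vector<const Variable*>& variables) {
    int active = 0;
    for (const Variable* v : variables) {
        if (v->query() != 0) ++active;
    }
    return active;
}
```

In the test below, two different expressions read the same variable long after it was set, which corresponds to one variable feeding several algorithms.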

(3) The term varier is used in this work as the most abstract designation of an information carrier, which can be either an activator switch or a variable. In naturally developed ICEs from the level of cnidarians (corals, jellyfish) upward, the variers are neurons.

In this work, a neuron is considered an activating switch that changes between no-output and output states in response to the external signal relevant to it. Depending on the type of input signal, neurons are classified as changeable or non-changeable. An example of a non-changeable neuron is a motor neuron, which transmits impulses from a central area of the nervous system to an effector, such as a muscle. An example of a changeable neuron is a brain neuron, whose activating signal can be reprogrammed so that the neuron responds to a number of different signals (Best 1990).
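A deliberately simplified C++ sketch of this classification, assuming a neuron can be reduced to a set of trigger signals: it switches from no output to output when one of them arrives, and only a changeable neuron accepts a new set of triggers. The `Neuron` class and the integer `Signal` type are hypothetical and make no claim about real neurophysiology.

```cpp
#include <cassert>
#include <set>
#include <utility>

using Signal = int;  // hypothetical identifier of an input signal

// A neuron modelled as an activating switch: it produces output
// only when a signal from its trigger set arrives.
class Neuron {
public:
    Neuron(std::set<Signal> triggers, bool changeable)
        : triggers_(std::move(triggers)), changeable_(changeable) {}

    bool fires(Signal s) const { return triggers_.count(s) > 0; }

    // Only a changeable neuron can be reprogrammed to respond to other signals.
    bool reprogram(std::set<Signal> triggers) {
        if (!changeable_) return false;
        triggers_ = std::move(triggers);
        return true;
    }

private:
    std::set<Signal> triggers_;
    bool changeable_;
};
```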

(4) A stable object can only be considered an IDE if it contains at least one varier. Communication between the environment and an IDE occurs with the help of sensors, which are essentially activators. An IDE architecture containing only variables and no activators is physically possible but does not comply with IDE principles, because such an entity is completely independent of the environment.

(5) Of the IDE and the ISE, only the former inherently possesses information-related functionality, whereas an ISE can be any phenomenon of the organic or inorganic world, with or without information-related abilities. An IDE, with the mediating varier as its interface, constitutes the kernel of the communication environment, which in general can receive information from various ISEs. An object possessing IDE capabilities, with or without ISE capabilities, is designated an Information Capable Entity (ICE).
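The relationships between IDE, ISE and ICE can be sketched in C++ as follows, assuming the traffic-light example. `InformationDrivenEntity` is a hypothetical interface for IDE capabilities, `FallingBranch` is an ISE with no information-related abilities of its own, and the `isICE` trait encodes the definition that an ICE is any entity with IDE capabilities. The sketch requires C++17.

```cpp
#include <cassert>
#include <string>
#include <type_traits>

// Mediating varier: the IDE's interface to its environment.
struct Button {
    bool pressed = false;
};

// IDE capability: querying the varier and producing a resulting change.
struct InformationDrivenEntity {
    virtual ~InformationDrivenEntity() = default;
    virtual std::string react(const Button& b) = 0;
};

// An ISE without any information-related abilities:
// anything that happens to press the button.
struct FallingBranch {
    void hit(Button& b) const { b.pressed = true; }
};

// An IDE: the traffic light, the kernel of this communication environment.
struct TrafficLight : InformationDrivenEntity {
    std::string react(const Button& b) override {
        return b.pressed ? "pedestrian green" : "vehicle green";
    }
};

// ICE: any entity with IDE capabilities, with or without ISE capabilities.
template <class T>
constexpr bool isICE = std::is_base_of_v<InformationDrivenEntity, T>;

static_assert(isICE<TrafficLight>);
static_assert(!isICE<FallingBranch>);
```

The light reacts in the same way regardless of who or what pressed the button, which mirrors the point that an ISE can be an arbitrary phenomenon.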

(6) As long as the same cause produces the same effect, the nature and structure of the varier(s) are irrelevant. If a lagoon is periodically filled with water lacking sufficient nutrients, the organisms living there can change their metabolism by reacting to various environmental parameters, such as water salinity, temperature or density, each with the help of a distinct inquiring mechanism. The same is true of artificial devices: the traffic light will function in the same way with differently designed push buttons.
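A C++ sketch of the traffic-light case, assuming two hypothetical button designs with entirely different internals. The template `pedestrianPhaseRequested` relies only on the set/query behaviour (`press`, `isSet`), so the controller works identically with either design.

```cpp
#include <cassert>

// Two hypothetical button designs with different internal structure.
struct MechanicalButton {
    bool contactClosed = false;
    void press() { contactClosed = true; }
    bool isSet() const { return contactClosed; }
};

struct CapacitiveButton {
    int capacitance = 0;  // arbitrary units
    void press() { capacitance = 100; }
    bool isSet() const { return capacitance > 50; }
};

// The controller depends only on the cause-effect behaviour of the varier,
// not on its nature or structure.
template <class Button>
bool pedestrianPhaseRequested(const Button& b) {
    return b.isSet();
}
```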

3.2. Information Capable Entities (ICE)

3.2.1. ICE Classification

All currently known ICEs developed on Earth as a result of either the evolutionary process or human engineering. However, their features and structures differ greatly. Naturally developed unicellular and multicellular organisms were followed by social organisms and by artificial devices created by the most complex natural ICEs, human beings. Known ICEs can be separated into the following classes and subclasses based on certain fundamental characteristics:

(1) Biological organisms

Unicellular microorganisms: the simplest form of naturally occurring ICEs

Multi-cellular organisms from non-structured sponges up to human beings

Social organisms, ranging from primitive organizations like ant colonies up to human societies and states

(2) Artificial objects and systems

Non-computer artificial objects and systems. These are entities that use the simplest forms of information and are normally unable to produce information. Among them are all electrically powered devices equipped with at least one controlling switch. Such devices also possess various sensors that accept different kinds of information.

Computers and non-computer systems containing one or more embedded processors (everything from dishwashers to cars, airplanes, etc.)

Components of all the aforementioned entities that possess ICE functionality.

Software entities.

The only characteristic common to all ICEs is that they have almost nothing in common. They are studied by distinctly different fields of science, each using its own conceptual apparatus, which is hardly compatible with those of the other sciences. Furthermore, ICEs are not necessarily confined to a specific location in space, as is the case with social organisms, and they may seem immaterial, as software entities do.

The task of defining a common denominator for all these systems is by far the most complex of all TMI undertakings. The solution consists of interpreting these systems as Information Capable Entities whose structure and functionality are defined in terms of their abilities for the use and production of information. Two ICE classes are worthy of special consideration.

First, software entities differ from other ICE classes in that they are not independent. Software is part of the computer on which it runs and cannot be understood without the context of its computing environment. On the other hand, software entities are the only kind of ICE that is effectively expressed in formal (programming) languages, and hence they are widely used in this work to demonstrate IDE and ISE characteristics.

Another very specific kind of ICE is the human being, the most complex ICE of natural origin. In this work, human beings are viewed as biological computer-controlled devices, following the idea first formulated by John Lilly in 1968 in “Programming and Metaprogramming in the Human Biocomputer” (Lilly 1968). Despite the enormous progress in computing over the last forty years, Lilly’s ideas have not lost their relevance. Listed below are excerpts from his book (1968, 20–21):

· The human brain is assumed to be an immense biocomputer, several thousands of times larger than any constructed by Man from non-biological components by 1965. The brain is defined as the visible palpable living set of structures to be included in the human computer.

· The numbers of neurons in the human brain are variously estimated at 13 billion with approximately five times that many glial cells. This computer operates continuously throughout all of its parts and does literally millions of computations in parallel simultaneously. It has approximately two million visual inputs and one hundred thousand acoustic inputs. It is hard to compare the operations of such a magnificent computer to any artificial ones existing today because of its extremely advanced and sophisticated construction.

· The computer has a very large memory storage and controls hundreds of thousands of outputs in a coordinated and programmed fashion.

· Certain programs are built-in, within the difficult-to-modify parts of the (macro and micro) structure of the brain itself. Other programs are acquirable throughout life.

· The human computer has stored program properties. A stored program is a set of instructions which is placed in the memory storage system of the computer and which controls the computer when orders are given for that program to be activated. The activator can either be another system within the same computer, or someone (something) outside the computer.

Viewing human beings as devices controlled by a biocomputer allows them to be compared with artificial devices controlled by digital computers. Among other things, this makes it possible to describe biocomputers in terms traditionally used for digital computers, such as memory, input and output ports, code and data, and algorithms run on the computer.
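A hypothetical C++ sketch of describing a biocomputer in digital-computer terms, loosely based on the Lilly excerpts above: a memory of named stored programs, each either built-in or acquired, that run when activated. Treating built-in programs as completely unmodifiable is a simplification of Lilly's "difficult-to-modify", and all names are illustrative.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>

// Where a stored program comes from (after Lilly's distinction).
enum class Origin { BuiltIn, Acquired };

struct StoredProgram {
    Origin origin;
    std::string behaviour;  // stands in for the program's code
};

// A biocomputer described in digital-computer terms: a memory
// holding stored programs that are run when activated.
class Biocomputer {
public:
    void store(const std::string& name, StoredProgram program) {
        memory_[name] = std::move(program);
    }

    // Simplification: built-in programs are treated as unmodifiable,
    // acquired programs can be rewritten at any time.
    bool modify(const std::string& name, const std::string& behaviour) {
        auto it = memory_.find(name);
        if (it == memory_.end() || it->second.origin == Origin::BuiltIn) return false;
        it->second.behaviour = behaviour;
        return true;
    }

    // The caller plays the role of the activator, which may be another
    // system of the same computer or something outside it.
    std::string activate(const std::string& name) const {
        auto it = memory_.find(name);
        return it == memory_.end() ? std::string{} : it->second.behaviour;
    }

private:
    std::map<std::string, StoredProgram> memory_;
};
```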

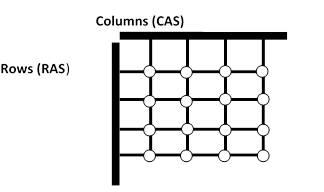

While both kinds of computers are similar in many characteristics, they differ completely in memory organization and the order of command execution. Digital computers have a memory consisting of sequences of bits organized into bytes and controlled by a central processing unit that executes commands stored in the computer memory, whereas biocomputers are built of neurons, which, at least at first glance, function on completely different principles and have nothing in common with the CPU of a digital computer.

3.2.2. Biological ICE

The view of evolution detailed below does not coincide with Darwin’s theory, which is strongly based on biological development. The subject of the proposed view is the general algorithms of development, regardless of whether they apply to biological organisms or to artificial systems. Evolution is seen as the process of developing biological entities, from the simplest biological organisms up to human beings. It started on a still hot Earth, where, under the influence of external factors, it first produced systems of inorganic molecules; these united with organic molecules, whose interaction eventually gave birth to the first unicellular organism, and so on.

Objects controlled by information differ in their internal organization. The simplest ICEs are single-level objects with one or several sensors. These include systems like the aforementioned traffic light, or those unicellular organisms that can choose by themselves the moment to react.

The next level of complexity consists of programmed objects, which, in addition to externally accessible information carriers, also possess internal variables whose values are set either when the ICE is created (hard-coding) or during its lifetime (soft-coding).
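A C++ sketch contrasting the two levels, under stated assumptions: in the single-level object the sensor state alone determines the reaction, while the hypothetical `ProgrammedDevice` also has a `const` internal variable fixed at construction (hard-coding) and a mutable one changed during its lifetime (soft-coding). The threshold arithmetic is an arbitrary illustration.

```cpp
#include <cassert>

// Single-level ICE: the sensor state directly determines the reaction.
bool singleLevelReacts(bool sensorActive) {
    return sensorActive;
}

// Programmed ICE: besides its external sensor, it has internal variables.
class ProgrammedDevice {
public:
    explicit ProgrammedDevice(int threshold) : threshold_(threshold) {}

    // Soft-coding: an internal variable is changed during the lifetime.
    void learn(int newSensitivity) { sensitivity_ = newSensitivity; }

    bool reacts(int sensorReading) const {
        return sensorReading * sensitivity_ >= threshold_;
    }

private:
    const int threshold_;  // hard-coded when the device is created
    int sensitivity_ = 1;  // soft-coded, adjustable later
};
```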